Jensen Huang's 2026 debut: a 2.5-ton "nuclear bomb" dropped at CES 2026


By: ifanr (爱范儿)

*There's a small surprise video at the end.

This is the first time in five years that NVIDIA hasn't released a consumer graphics card at CES. CEO Jensen Huang strode to center stage at NVIDIA Live, still wearing last year's shiny crocodile-leather jacket.

Unlike last year's solo keynote, Jensen Huang packed his 2026 schedule: from NVIDIA Live to the Siemens Industrial AI Dialogue and then Lenovo TechWorld, he covered three events in just 48 hours. Last time, he announced the RTX 50 series graphics cards at CES. This time, physical AI, robotics, and a "company-grade nuke" stole the spotlight.

The Vera Rubin computing platform debuts: the more you buy, the more you save

During the launch, the ever-playful Jensen brought a 2.5-ton AI server rack onto the stage to introduce the focus of the event: the Vera Rubin computing platform. Named after the astronomer whose observations provided key evidence for dark matter, it has a clear goal: to accelerate AI training and bring the next generation of models sooner.


Typically, NVIDIA follows an internal rule: each generation upgrades at most one or two chips. Vera Rubin broke that norm by redesigning six chips at once, all of which have already entered mass production.

The reason: as Moore's Law slows, conventional performance gains can no longer keep up with the tenfold annual growth of AI models, so NVIDIA opted for "extreme co-design", innovating at every layer of every chip and across the entire platform simultaneously.

The six chips are:

1. Vera CPU:
   - 88 NVIDIA custom Olympus cores
   - NVIDIA Spatial Multithreading, supporting 176 threads
   - NVLink-C2C bandwidth of 1.8 TB/s
   - 1.5 TB of system memory (3x that of Grace)
   - LPDDR5X bandwidth of 1.2 TB/s
   - 22.7 billion transistors

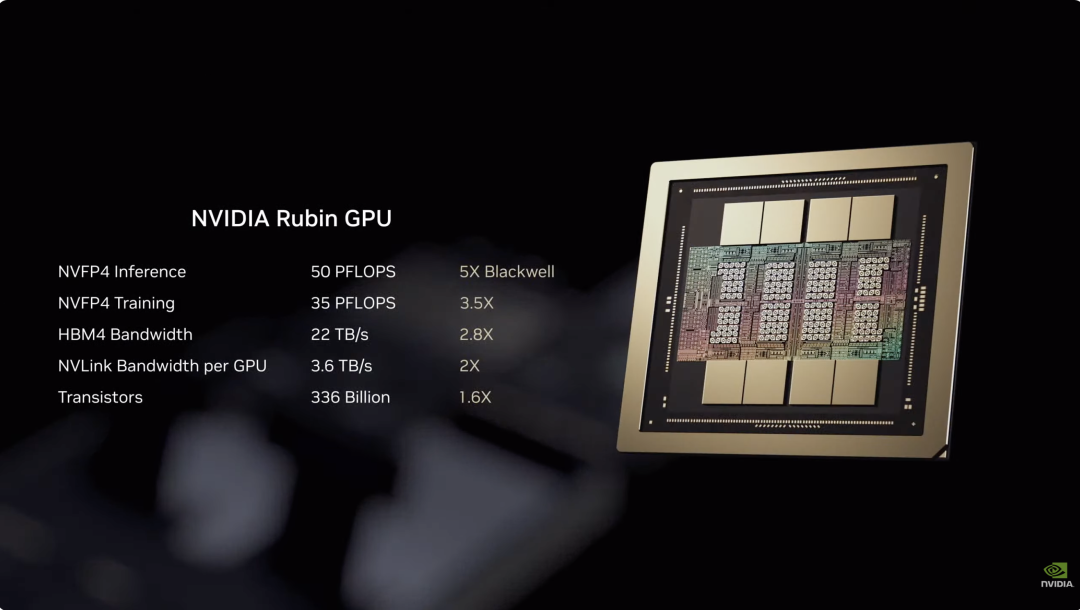

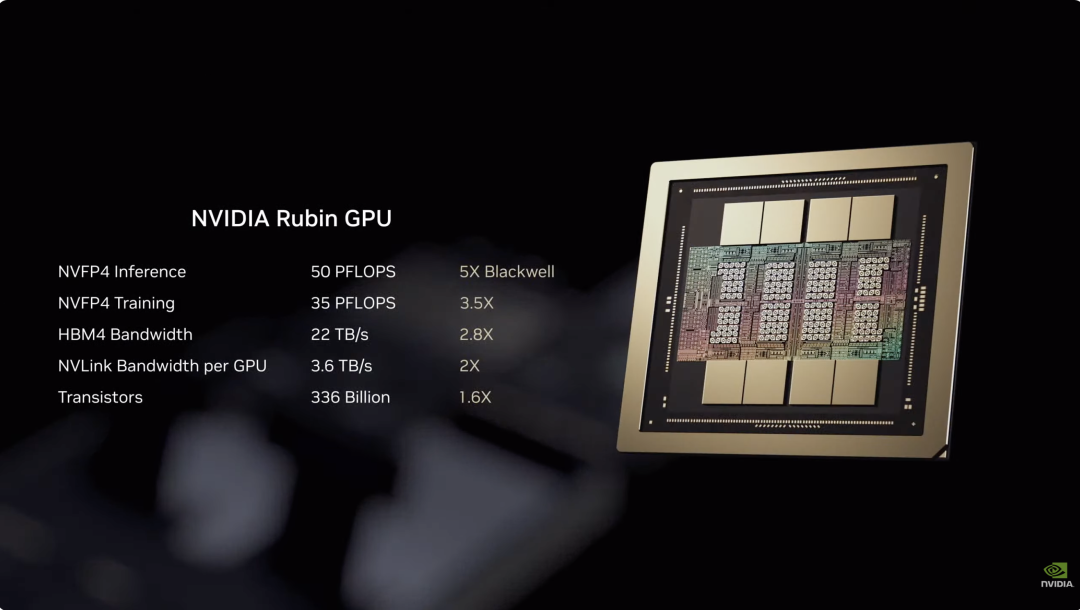

2. Rubin GPU:
   - 50 PFLOPS of NVFP4 inference compute, 5x that of the previous-generation Blackwell
   - 336 billion transistors, about 1.6x Blackwell's count
   - Third-generation Transformer Engine, which dynamically adjusts precision to the needs of Transformer models
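
The Rubin figures are quoted in NVFP4, NVIDIA's 4-bit floating-point format. As a rough illustration of the block-scaling idea behind such formats, the sketch below rounds values onto the FP4 (E2M1) grid with one shared scale per 16-value block. It is a simplified sketch under stated assumptions, not NVIDIA's implementation: real NVFP4 stores each block scale in FP8 (E4M3) and adds a per-tensor FP32 scale, both omitted here, and the Transformer Engine's dynamic precision selection is not modeled. The function names are hypothetical.

```python
# Illustrative sketch of block-scaled 4-bit float (FP4 E2M1) quantization,
# the idea behind NVFP4. Simplifications (assumptions, not NVIDIA's spec):
# block scales are kept as Python floats instead of FP8 E4M3, and there is
# no second per-tensor scale.

E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)  # all non-negative E2M1 values
BLOCK_SIZE = 16  # NVFP4 shares one scale across 16 consecutive values


def quantize_block(values):
    """Map one block to (scale, codes), where codes are signed E2M1 values."""
    amax = max(abs(v) for v in values)
    # Choose the scale so the block's largest magnitude lands on 6.0, the top of the grid.
    scale = amax / E2M1_MAGNITUDES[-1] if amax > 0 else 1.0
    codes = []
    for v in values:
        target = abs(v) / scale
        nearest = min(E2M1_MAGNITUDES, key=lambda g: abs(g - target))
        codes.append(nearest if v >= 0 else -nearest)
    return scale, codes


def quantize(tensor):
    """Split a flat list into blocks and quantize each block independently."""
    return [quantize_block(tensor[i:i + BLOCK_SIZE])
            for i in range(0, len(tensor), BLOCK_SIZE)]


def dequantize(blocks):
    """Rebuild approximate values as code * per-block scale."""
    return [code * scale for scale, codes in blocks for code in codes]


data = [0.1 * i - 1.5 for i in range(32)]  # 32 values -> 2 blocks
restored = dequantize(quantize(data))
max_err = max(abs(a - b) for a, b in zip(data, restored))
print(f"blocks: {len(quantize(data))}, max abs error: {max_err:.3f}")
```

Because the widest gap on the E2M1 grid is 2 (between 4 and 6), each value's rounding error is at most its block's scale. Keeping blocks small means one outlier only coarsens the 15 values that share its scale, not the whole tensor.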

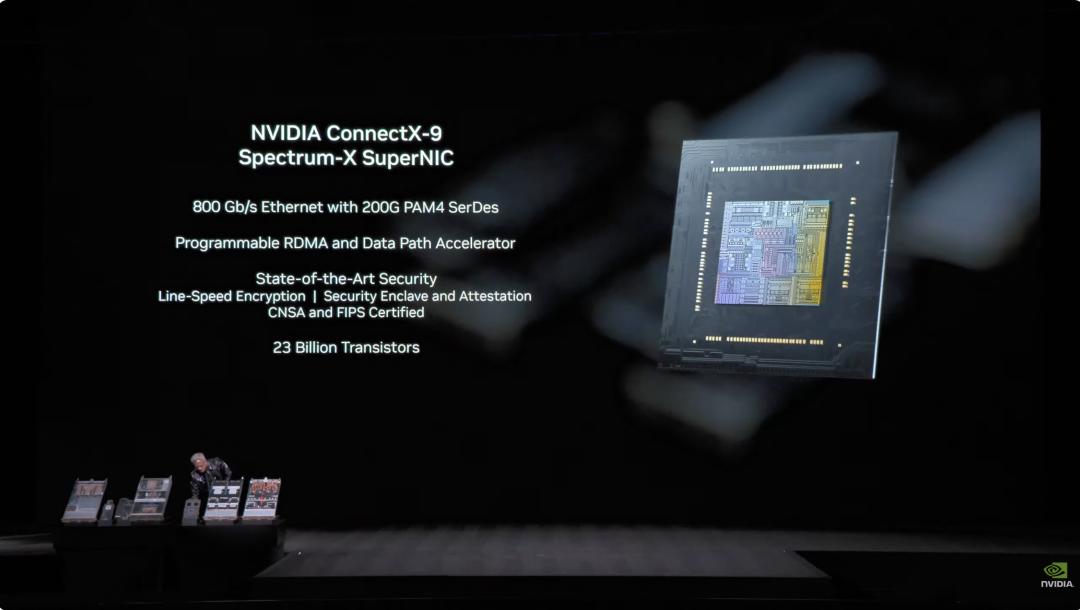

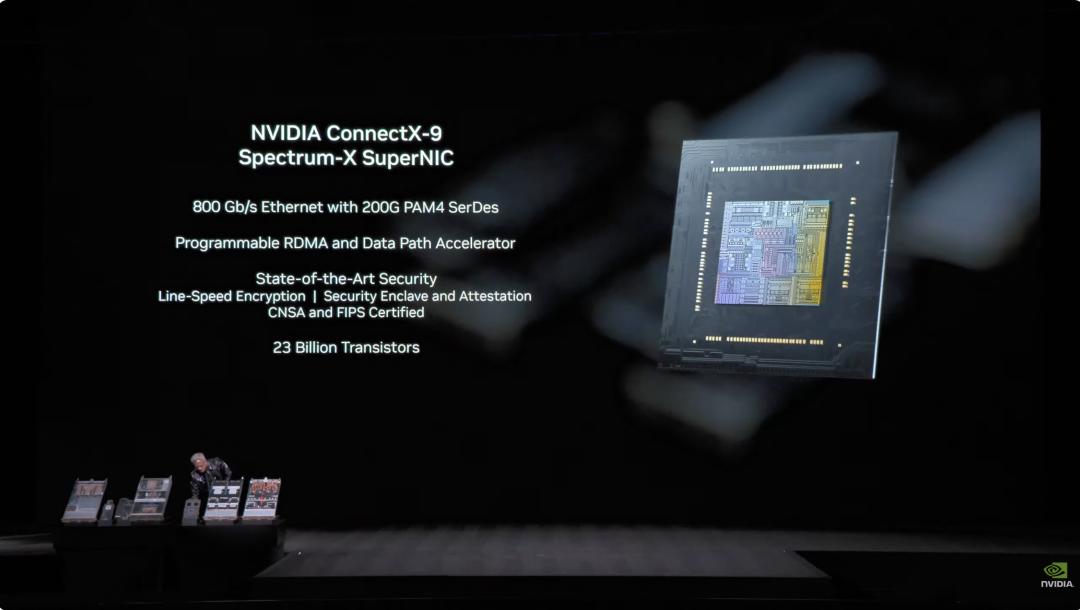

3. ConnectX-9 NIC:
   - 800 Gb/s Ethernet based on 200G PAM4 SerDes
   - Programmable RDMA and data-path accelerator
   - CNSA and FIPS certified
   - 23 billion transistors

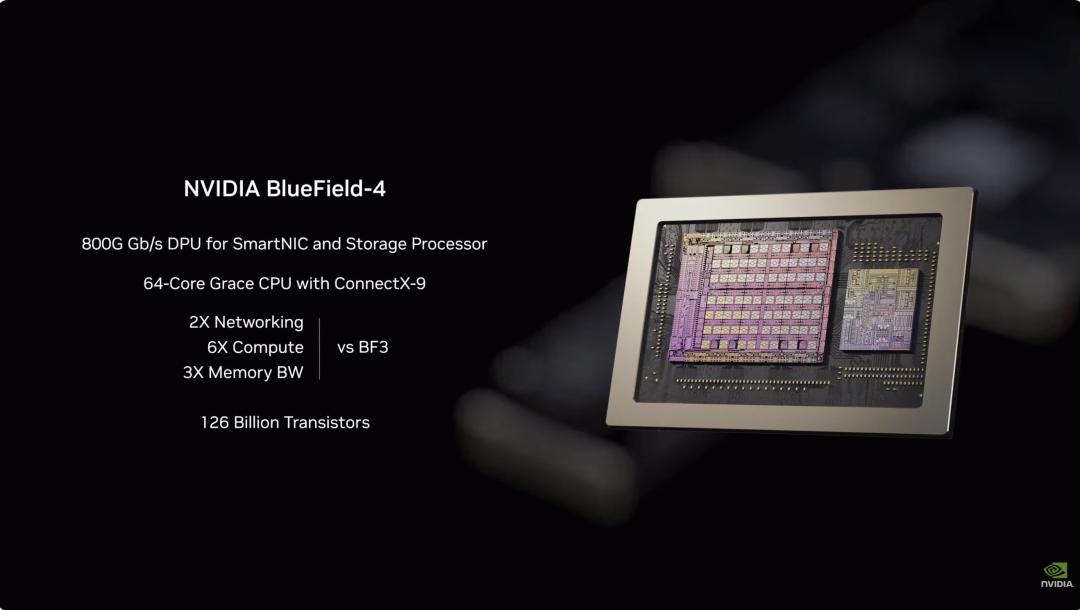

4. BlueField-4 DPU:
   - End-to-end engine built for next-generation AI storage platforms
   - 800 Gb/s DPU for SmartNICs and storage processors
   - 64-core Grace CPU paired with ConnectX-9
   - 126 billion transistors

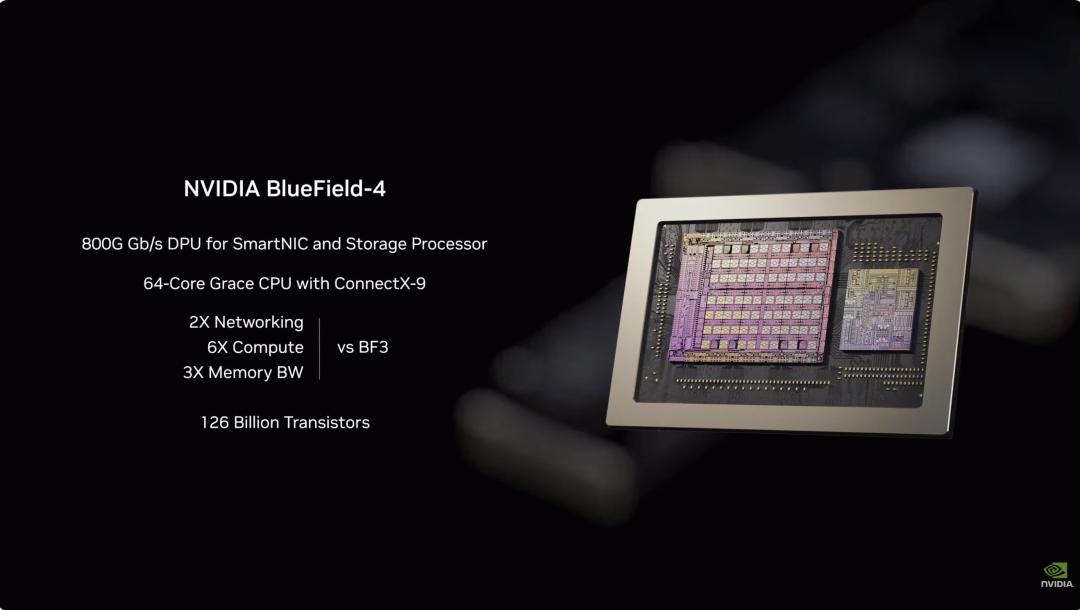

5. NVLink-6 Switch Chip:
   - Connects 18 compute nodes, letting up to 72 Rubin GPUs operate as a single unit
   - Under the NVLink 6 architecture, each GPU gets 3.6 TB/s of all-to-all bandwidth
   - 400G SerDes, with support for in-network SHARP collectives, so collective operations run directly inside the switch network

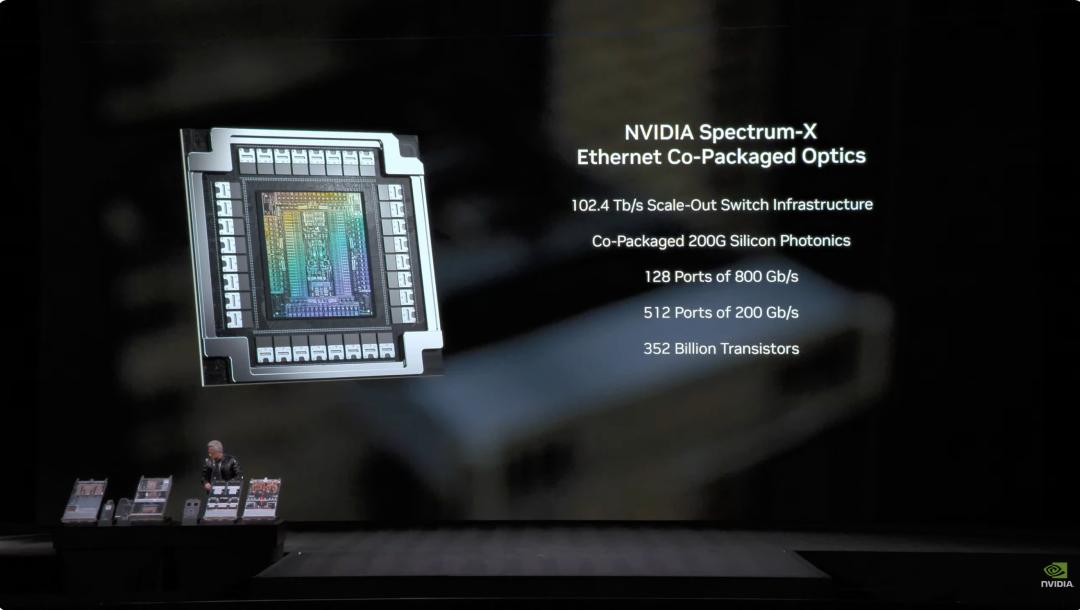

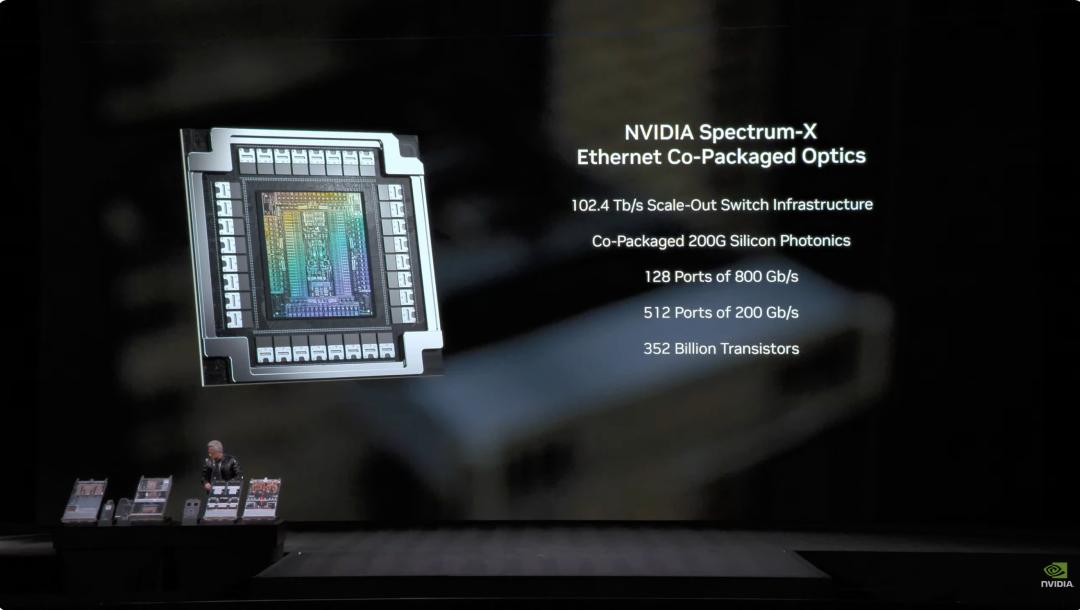

6. Spectrum-6 Optical Ethernet Switch Chip:
   - 512 channels at 200 Gb/s each, for faster data transfer
   - Integrated silicon photonics based on TSMC's COUPE process
   - Co-packaged optics
   - 352 billion transistors
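
The networking figures above are related by simple lane arithmetic. The sketch below assumes a port's bandwidth is split evenly across identical SerDes lanes; under that assumption an 800 Gb/s ConnectX-9 port corresponds to four 200G lanes, and Spectrum-6's 512 channels at 200 Gb/s add up to 102.4 Tb/s. Expressing that total as 800G ports is only a unit conversion, not a claim about how the switch is configured, and the helper names are illustrative.

```python
import math

# Back-of-the-envelope link arithmetic using figures from the chip list.
# Assumption: a port's bandwidth is split evenly across identical SerDes lanes.


def lanes_needed(port_gbps: int, lane_gbps: int) -> int:
    """SerDes lanes of lane_gbps each required to carry a port_gbps link."""
    return math.ceil(port_gbps / lane_gbps)


def aggregate_tbps(channels: int, gbps_per_channel: int) -> float:
    """Total capacity in Tb/s for a device with `channels` lanes."""
    return channels * gbps_per_channel / 1000


cx9_lanes = lanes_needed(800, 200)          # ConnectX-9: 800 Gb/s on 200G PAM4 SerDes
spectrum6_tbps = aggregate_tbps(512, 200)   # Spectrum-6: 512 channels x 200 Gb/s
spectrum6_800g_ports = (512 * 200) // 800   # same capacity expressed as 800G ports

print(f"ConnectX-9 lanes per port: {cx9_lanes}")
print(f"Spectrum-6 capacity: {spectrum6_tbps:.1f} Tb/s (= {spectrum6_800g_ports} x 800G)")
```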

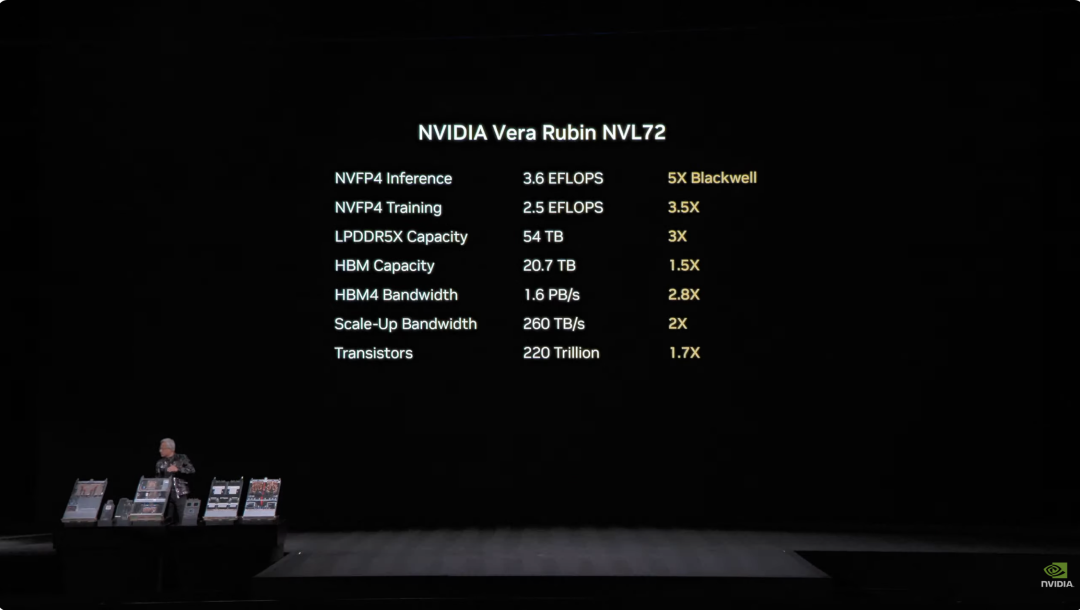

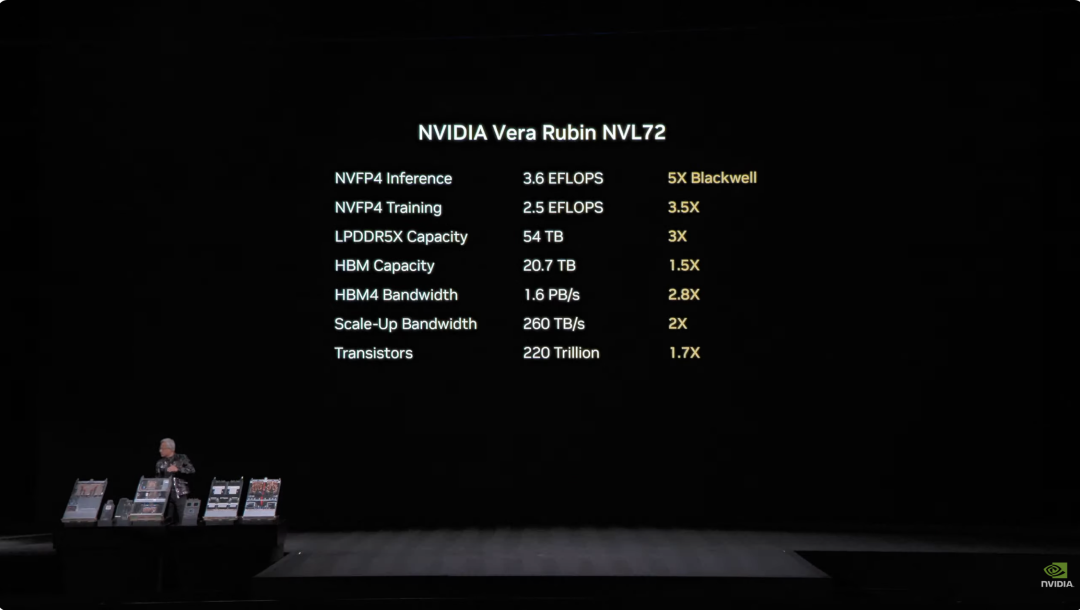

With deep integration of these six chips, the Vera Rubin NVL72 system delivers across-the-board improvements over the previous-generation Blackwell. In NVFP4 inference, the system reaches 3.6 EFLOPS, five times the previous Blackwell architecture. For NVFP4 training, performance reaches 2.5 EFLOPS, a 3.5x increase. On memory capacity, NVL72 carries 54 TB of LPDDR5X, 3x the previous generation, and 20.7 TB of HBM (high-bandwidth memory), a 1.5x increase. On bandwidth, HBM4 bandwidth reaches 1.6 PB/s, up 2.8x, and scale-up bandwidth hits 260 TB/s, double the previous generation. Despite these large performance gains, the transistor count grew only 1.7x, to 220 trillion, evidence of innovation in chip design and manufacturing.
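
Several of the rack-level numbers can be rebuilt from the per-chip specs in the list. The sketch below does that arithmetic under one assumption not stated in the article: each of the 18 compute nodes holds 4 Rubin GPUs and 2 Vera CPUs, giving 72 GPUs and 36 CPUs per rack. It shows that the 3.6 EFLOPS figure is a rack total (72 × 50 PFLOPS), that 72 × 3.6 TB/s gives 259.2 TB/s (the quoted 260 TB/s), and what per-GPU HBM capacity and bandwidth the quoted totals imply.

```python
# Cross-check NVL72 rack totals against the per-chip numbers in the list above.
# Assumption (not stated in the article): each of the 18 compute nodes holds
# 4 Rubin GPUs and 2 Vera CPUs, i.e. 72 GPUs and 36 CPUs per rack.

NODES, GPUS_PER_NODE, CPUS_PER_NODE = 18, 4, 2
gpus = NODES * GPUS_PER_NODE   # 72
cpus = NODES * CPUS_PER_NODE   # 36

# Rack totals built up from per-chip specs
inference_eflops = gpus * 50 / 1000     # 50 PFLOPS NVFP4 per Rubin GPU
scale_up_tbps = gpus * 3.6              # 3.6 TB/s NVLink 6 bandwidth per GPU
lpddr5x_tb = cpus * 1.5                 # 1.5 TB system memory per Vera CPU

# Per-GPU figures implied by the quoted rack totals
hbm_gb_per_gpu = 20.7 * 1000 / gpus     # 20.7 TB HBM across the rack
hbm_tbps_per_gpu = 1.6 * 1000 / gpus    # 1.6 PB/s HBM4 bandwidth across the rack

print(f"{gpus} GPUs, {cpus} CPUs")
print(f"NVFP4 inference: {inference_eflops:.1f} EFLOPS (quoted: 3.6)")
print(f"Scale-up bandwidth: {scale_up_tbps:.1f} TB/s (quoted: 260)")
print(f"LPDDR5X: {lpddr5x_tb:.0f} TB (quoted: 54)")
print(f"Implied HBM per GPU: {hbm_gb_per_gpu:.1f} GB, {hbm_tbps_per_gpu:.1f} TB/s")
```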

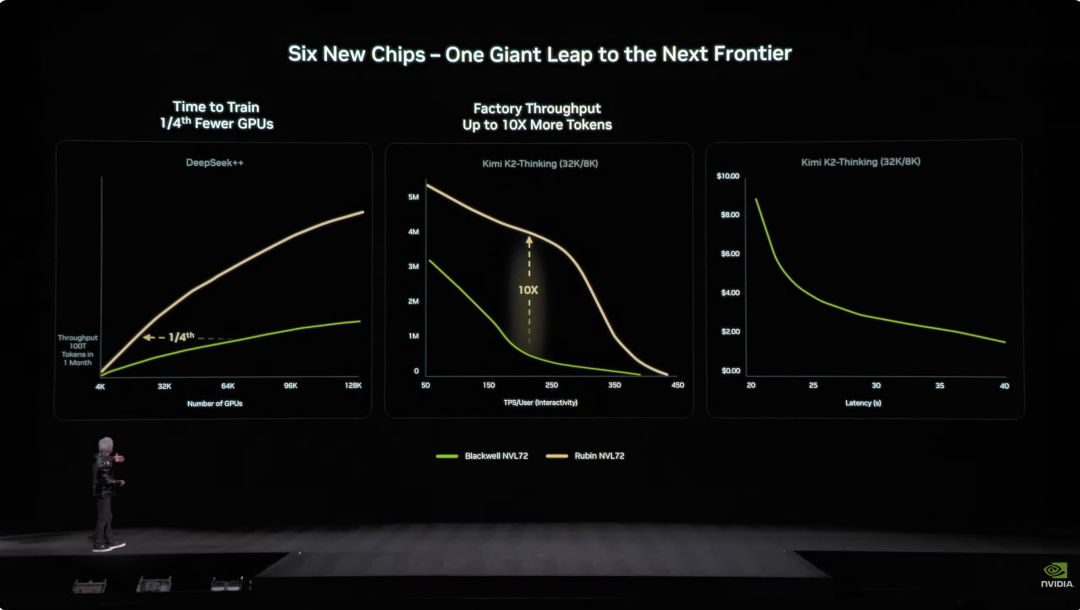

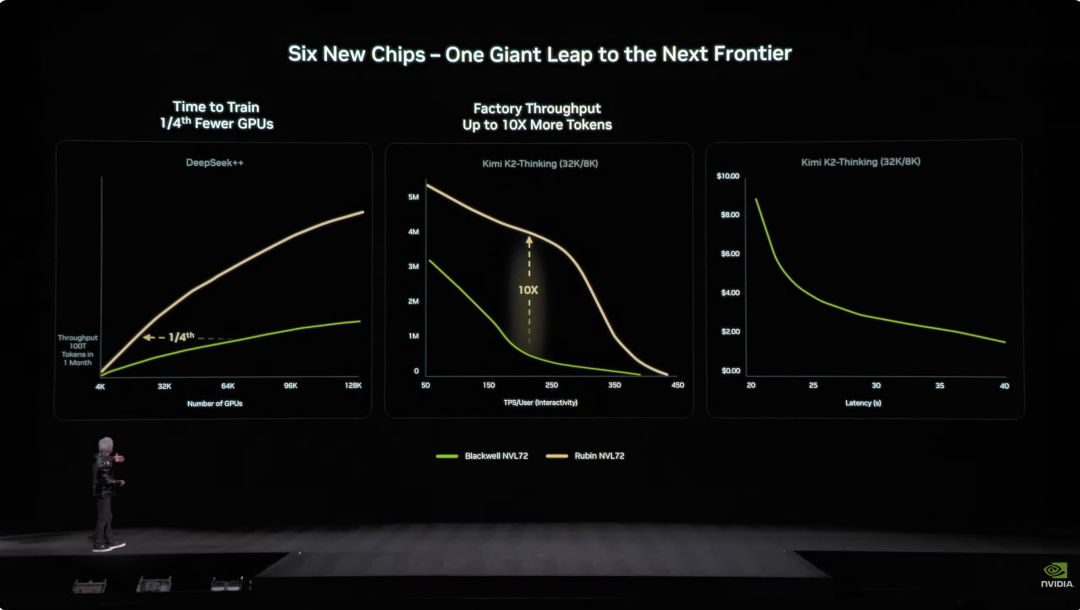

Vera Rubin also brings engineering breakthroughs. Previously, a supercomputer node needed 43 cables and two hours to assemble, and mistakes were common. Vera Rubin nodes use zero cables and only six liquid-cooling tubes, and can be assembled in five minutes. Even more striking, the back of the rack holds nearly 3.2 kilometers of copper cable: 5,000 cables forming the NVLink backbone at 400 Gb/s. In Jensen's words: "It probably weighs several hundred pounds. You have to be a pretty fit CEO to handle this job."

In the AI world, time is money. A key stat: to train a 10-trillion-parameter model, Rubin needs only a quarter as many systems as Blackwell, and each generated token costs about one-tenth as much.

Moreover, although Rubin draws twice the power of Grace Blackwell, its performance gains far outpace that increase: 5x in inference and 3.5x in training, or roughly 2.5x and 1.75x more performance per watt. More importantly, Rubin's throughput measured in AI tokens per watt per dollar is 10x that of Blackwell. For a $5 billion, gigawatt-scale data center, Jensen said, this directly doubles revenue potential.

The biggest pain point in the AI industry used to be insufficient context memory. AI models generate a "KV Cache" (key-value cache) as their working memory, and as conversations grow longer and models scale up, HBM capacity becomes the bottleneck.
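
To see why the KV cache outgrows HBM, it helps to size it. In a standard transformer, every token stores one key and one value vector per KV head in every layer, so the cache grows linearly with context length and with the number of concurrent sequences. The sketch below applies that formula to a hypothetical 70B-class model shape with grouped-query attention (80 layers, 8 KV heads, head dimension 128, BF16); these numbers and the 200 GiB free-HBM budget are assumptions for illustration, not figures from the keynote.

```python
from dataclasses import dataclass

# Rough KV-cache sizing for a decoder-only transformer.
# The model shape below is hypothetical (a 70B-class model with grouped-query
# attention); it is not a figure from the keynote.

GIB = 1024 ** 3


@dataclass(frozen=True)
class ModelShape:
    layers: int
    kv_heads: int        # key/value heads (fewer than query heads under GQA)
    head_dim: int
    bytes_per_elem: int  # 2 for FP16/BF16


def kv_bytes_per_token(m: ModelShape) -> int:
    # One K and one V vector per KV head, per layer, for every token
    return 2 * m.layers * m.kv_heads * m.head_dim * m.bytes_per_elem


def kv_bytes(m: ModelShape, tokens: int, sequences: int = 1) -> int:
    # Cache grows linearly with context length and with concurrent sequences
    return kv_bytes_per_token(m) * tokens * sequences


model = ModelShape(layers=80, kv_heads=8, head_dim=128, bytes_per_elem=2)

for context in (8_192, 131_072, 1_048_576):
    print(f"{context:>9,} tokens -> {kv_bytes(model, context) / GIB:6.1f} GiB per sequence")

free_hbm_gib = 200  # hypothetical HBM left after loading weights
sessions = free_hbm_gib * GIB // kv_bytes(model, 131_072)
print(f"128K-token sessions that fit in {free_hbm_gib} GiB: {sessions}")
```

Under these assumptions each token costs 320 KiB, a single 128K-token conversation needs 40 GiB, and only five such sessions fit in the assumed budget, which is the pressure an external context-memory tier is meant to relieve.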

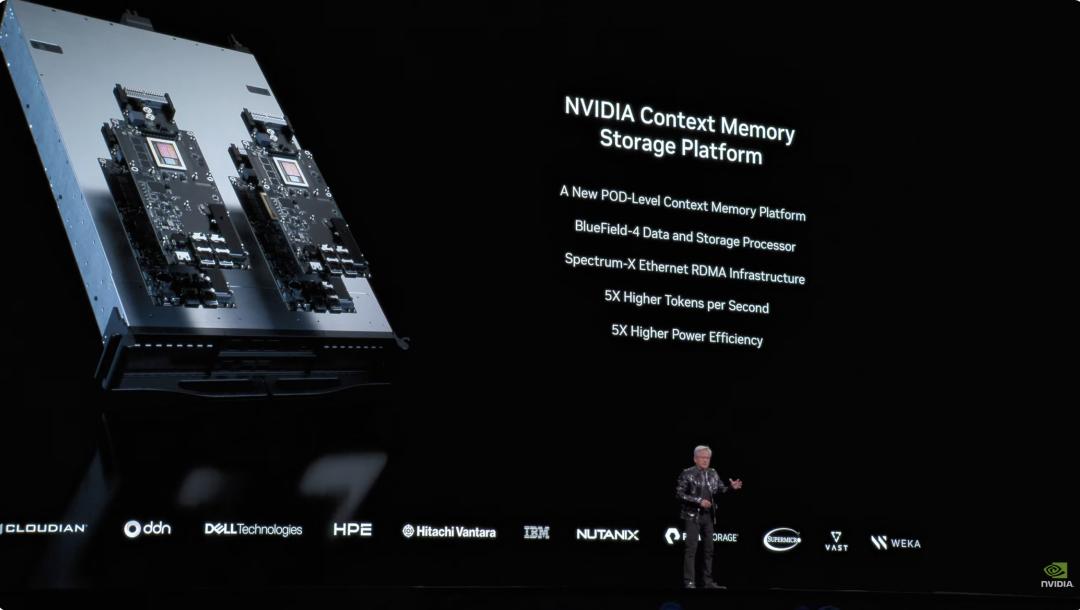

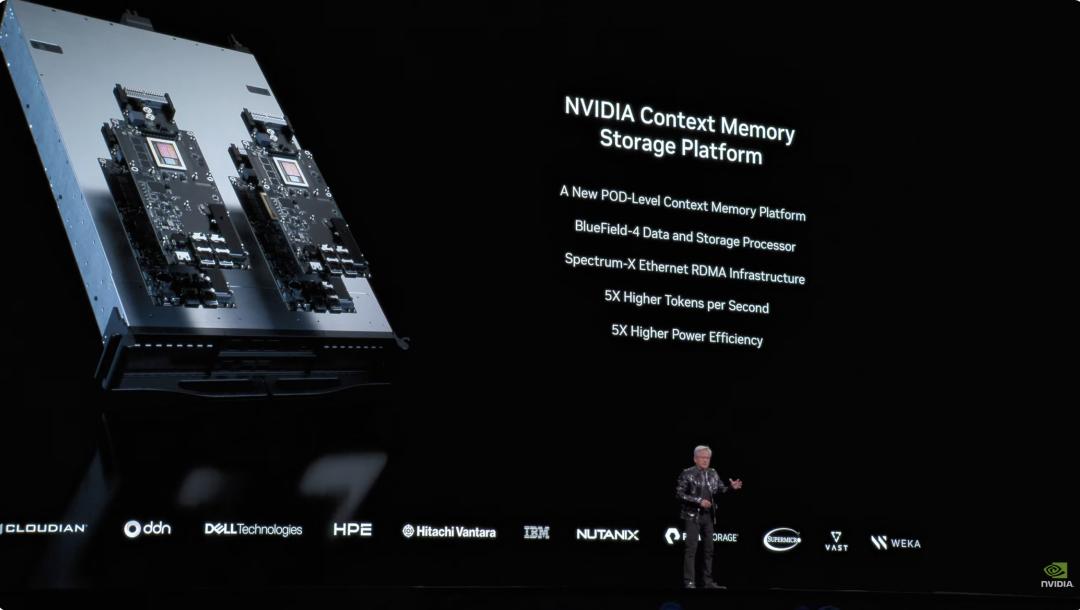

Last year, NVIDIA introduced the Grace Blackwell architecture to expand memory, but it still wasn't enough. Vera Rubin's solution is to deploy BlueField-4 processors in the rack to manage the KV cache. Each node is equipped with four BlueField-4s, each backed by 150 TB of context memory that is distributed to the GPUs, so each GPU gets an extra 16 TB, while the GPU itself has only about 1 TB on board. Crucially, bandwidth stays at 200 Gb/s with no loss of speed.

Capacity alone isn't enough, though. To make these KV-cache "sticky notes", spread across dozens of racks and thousands of GPUs, behave like a single pool of memory, the network must be "big enough, fast enough, and stable enough." That's where Spectrum-X comes in. NVIDIA bills Spectrum-X as the world's first end-to-end Ethernet networking platform purpose-built for generative AI. The latest generation uses TSMC's COUPE process with integrated silicon photonics, 512 channels × 200 Gb/s. Jensen did the math: for a $5 billion, gigawatt-scale data center, Spectrum-X delivers a 25% throughput boost, equivalent to saving $500 million. "You could say this network system is almost 'free.'"

On security, Vera Rubin also supports Confidential Computing. All data is encrypted in transit, at rest, and during computation, across PCIe, NVLink, CPU-GPU communication, and every other bus. Companies can deploy their models on external systems without worrying about data leaks.

DeepSeek shocked the world: open source and agents are mainstream in AI

Having covered the main event, let's go back to the start of the keynote. Jensen opened with a stunning number: roughly $10 trillion worth of computing infrastructure built over the past decade is being completely modernized. And this isn't just a hardware upgrade; it's a paradigm shift in software. He especially highlighted agentic models capable of autonomous behavior and singled out Cursor, which he said has completely changed how NVIDIA writes software.

The most exciting moment was his high praise for the open source community. Jensen said last year's breakthrough, DeepSeek-R1, surprised the world: as the first open source reasoning system, it set off a wave of industry development. On his slide, familiar Chinese players Kimi K2 and DeepSeek V3.2 ranked as the top two open source models. Jensen believes open source models may currently trail the very top models by about six months, but a new model emerges every six months. That pace of iteration means no startup, tech giant, or researcher, NVIDIA included, wants to miss out. So this time NVIDIA isn't just selling shovels and pitching graphics cards: it has built the multi-billion-dollar DGX Cloud supercomputer and developed cutting-edge models such as La Proteina (for protein design) and OpenFold 3.

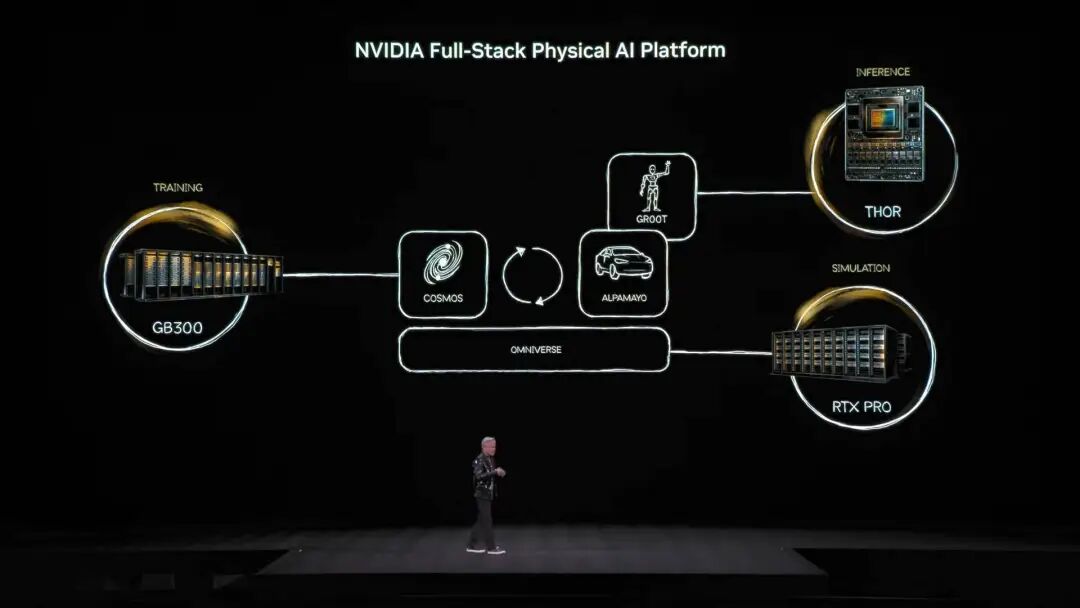

NVIDIA's open source model ecosystem covers biomedicine, physical AI, agentic models, robotics, and autonomous driving.

The various open source models in NVIDIA's Nemotron family were also highlights of the keynote, covering speech, multimodal, retrieval-augmented generation, safety, and other areas. Jensen noted that Nemotron models perform well on multiple leaderboards and are being widely adopted by enterprises.

What is physical AI? Dozens of models launched at once

If large language models tackle the "digital world," NVIDIA's next ambition is clearly to conquer the "physical world." Jensen said the data AI needs to understand physical laws and operate in the real world is extremely scarce. Beyond the agentic open source Nemotron models, he laid out the core "three computers" architecture for building physical AI.


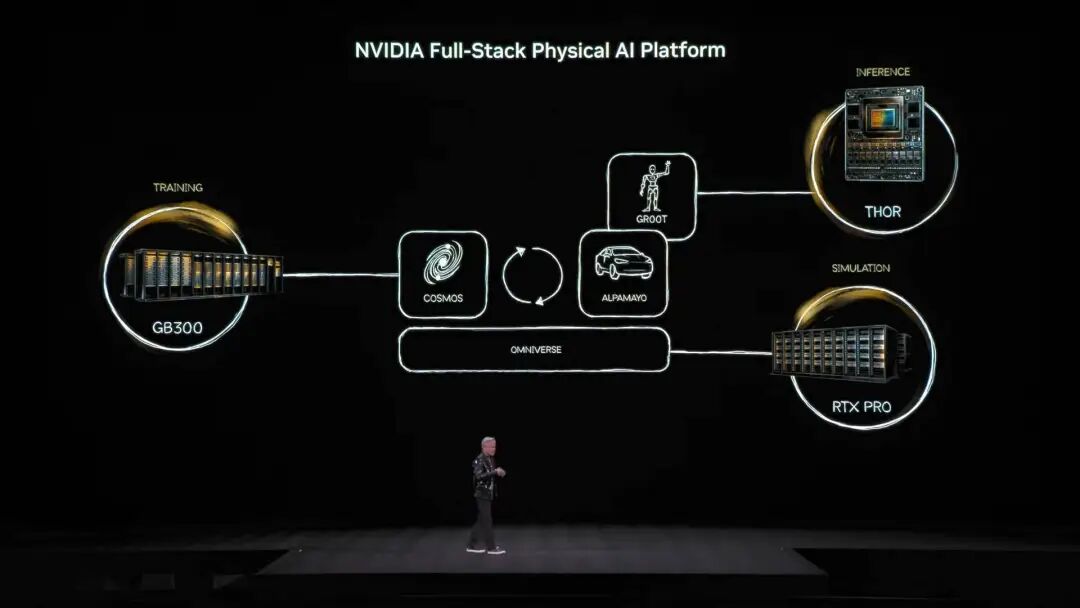

The training computer: the familiar machines built from training-grade GPUs, such as the GB300 system shown in the image.

The inference computer: the "cerebellum" running at the edge, in the robot or vehicle itself, responsible for real-time execution.

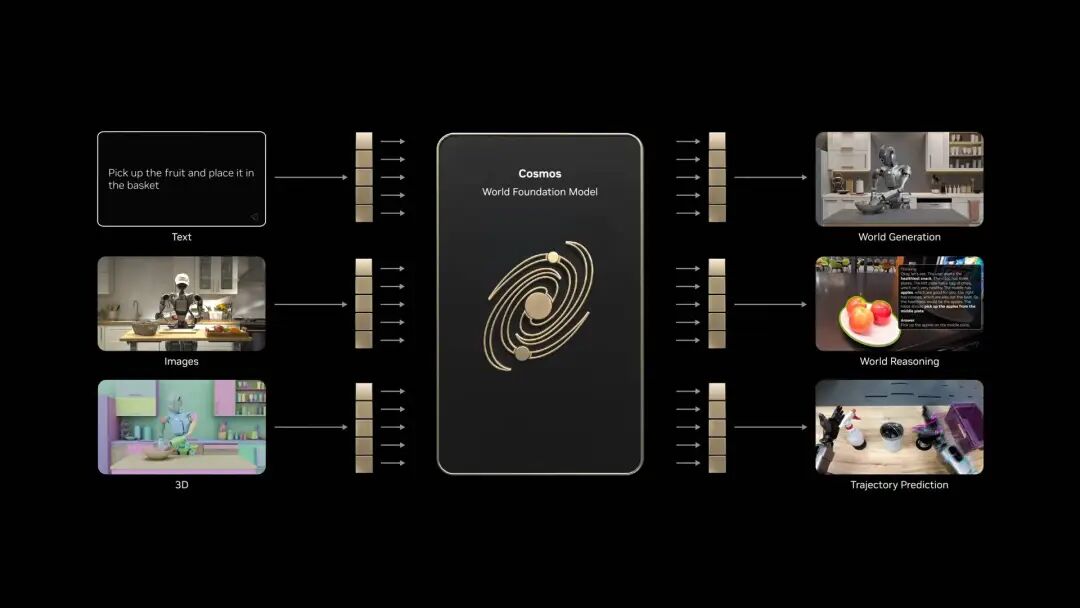

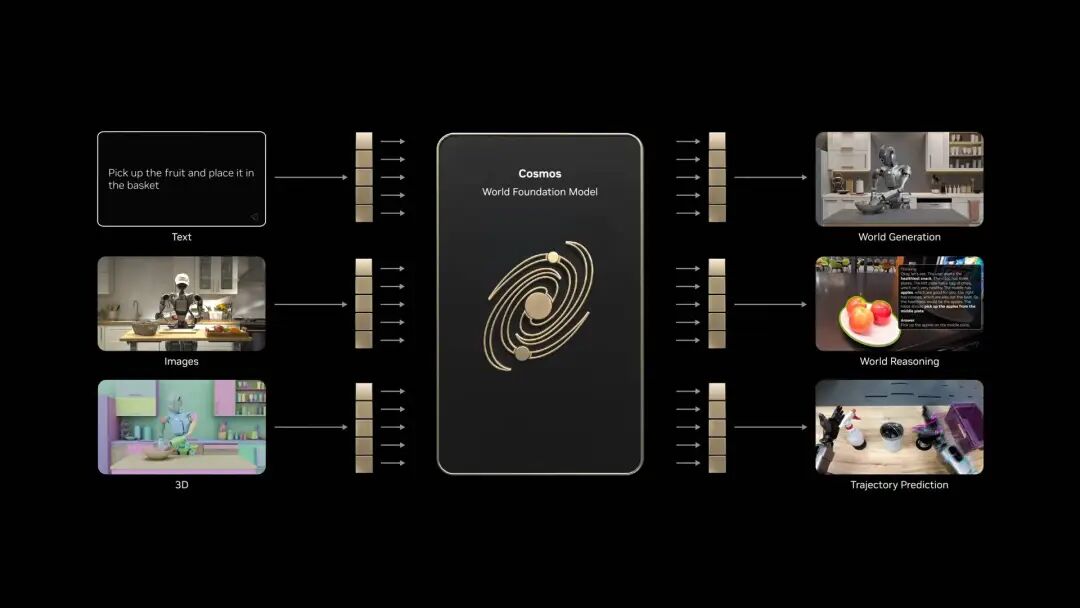

The simulation computer: Omniverse and Cosmos, which give AI a virtual training environment where it learns physical feedback through simulation.

The Cosmos system can generate massive amounts of physical-world AI training environments.

Building on this architecture, Jensen officially launched the show-stopping Alpamayo, billed as the world's first autonomous driving model that can reason.

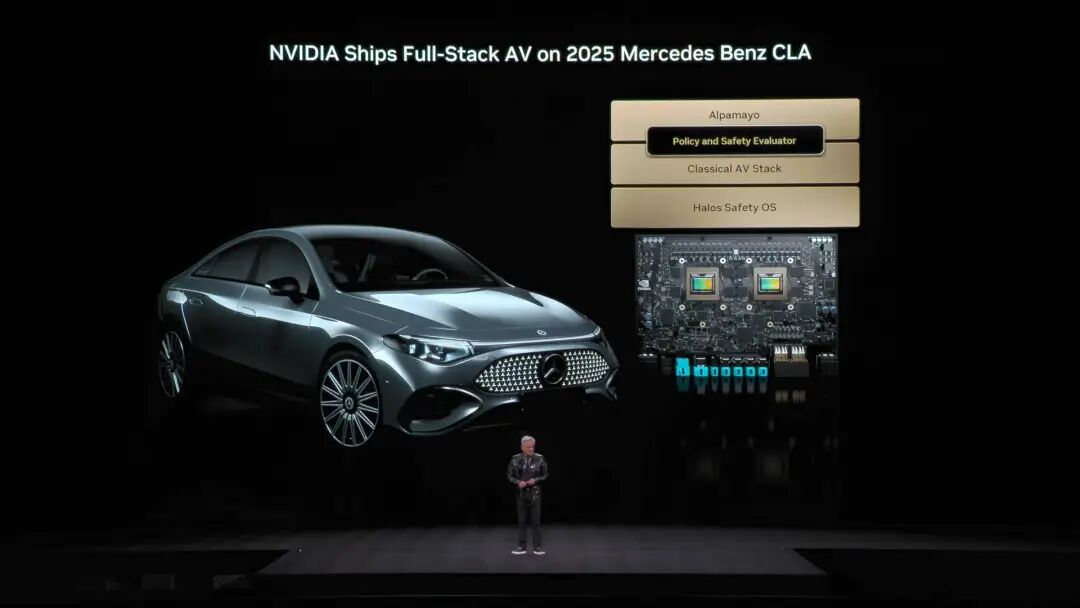

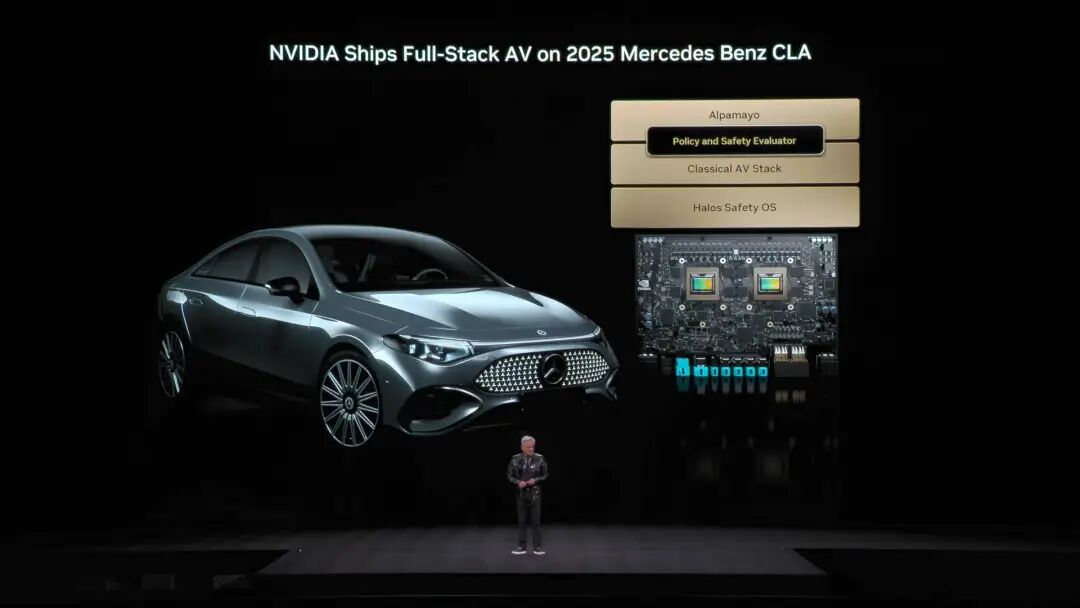

Unlike traditional autonomous driving stacks, Alpamayo is trained end to end. Its breakthrough is in tackling the "long tail problem" of autonomous driving: when it faces complex road conditions it has never seen before, Alpamayo doesn't rigidly execute code but reasons like a human driver. "It will tell you what it's going to do next, and why it made that decision." In the demo, the vehicle drove with striking naturalness, breaking extremely complex scenarios down into basic common sense. And this goes beyond a demo: Jensen announced that the Mercedes CLA, equipped with the Alpamayo tech stack, will launch in the US in Q1 this year, followed by Europe and Asia.

The car has been rated the world's safest by NCAP, thanks to NVIDIA's "dual safety stack" design: if the end-to-end AI model is uncertain about road conditions, the system immediately switches to a traditional, more reliable safety stack to keep driving safe.
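
The "dual safety stack" fallback described above amounts to an arbitration rule between two planners. The minimal sketch below shows one way such a confidence-gated switch could look; the `Plan` fields, the `arbitrate` function, and the 0.9 threshold are all hypothetical, since the article only says the car hands control to a traditional stack when the end-to-end model is uncertain.

```python
from dataclasses import dataclass

# Minimal sketch of a confidence-gated "dual stack" arbiter. All names, fields,
# and the 0.9 threshold are hypothetical; the article only says the car falls
# back to a traditional stack when the end-to-end model is uncertain.


@dataclass(frozen=True)
class Plan:
    source: str
    steering_rad: float
    target_speed_mps: float
    confidence: float  # planner's self-reported confidence, 0.0 to 1.0


def arbitrate(end_to_end: Plan, classical: Plan, threshold: float = 0.9) -> Plan:
    """Pick the end-to-end plan only when it is confident enough.

    Both plans are computed every cycle, so switching to the classical
    stack needs no extra work at decision time.
    """
    if end_to_end.confidence >= threshold:
        return end_to_end
    return classical


classical_plan = Plan("classical", steering_rad=0.0, target_speed_mps=8.0, confidence=1.0)
confident = Plan("end_to_end", steering_rad=0.02, target_speed_mps=13.0, confidence=0.97)
uncertain = Plan("end_to_end", steering_rad=0.30, target_speed_mps=13.0, confidence=0.55)

print(arbitrate(confident, classical_plan).source)  # end_to_end
print(arbitrate(uncertain, classical_plan).source)  # classical
```

Computing both plans every cycle mirrors the "instant" switch the article describes. A real system would likely also need hysteresis so control does not flip back and forth near the threshold; that is omitted here.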

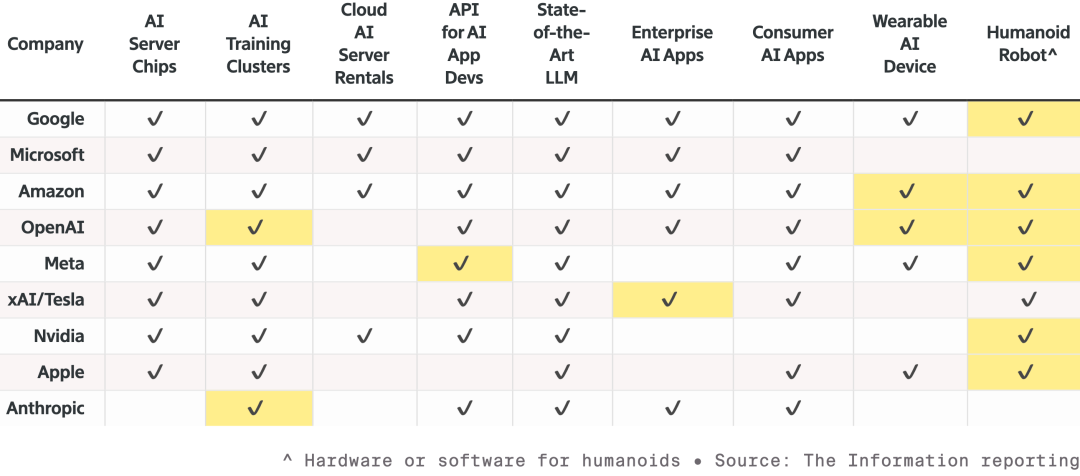

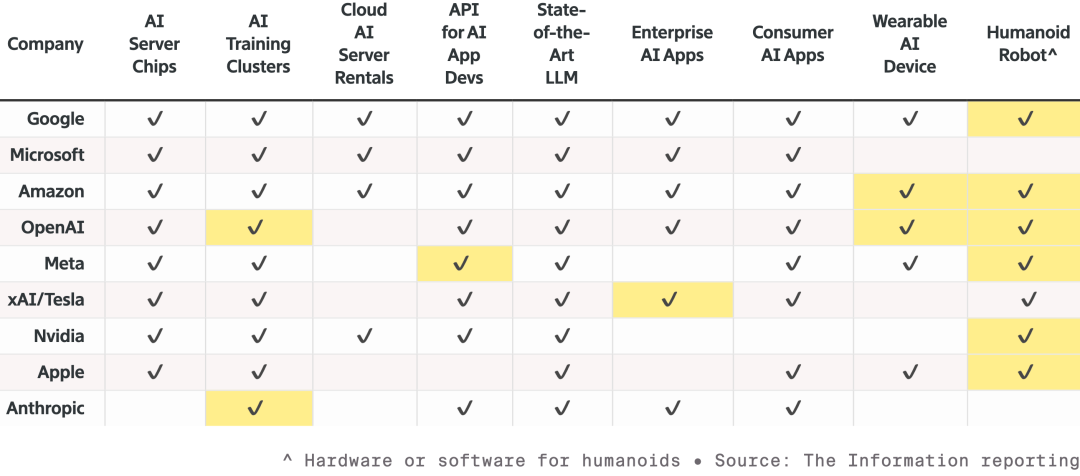

At the launch, Jensen also showcased NVIDIA's robotics strategy.

Nine leading AI and hardware companies are all expanding their product lines and vying for the robotics market; highlighted cells mark products added since last year.

All of these robots will be equipped with Jetson mini computers and trained in the Isaac simulator on the Omniverse platform. NVIDIA is also integrating this technology into industrial systems from Synopsys, Cadence, and Siemens.

Jensen invited humanoid and quadruped robots from Boston Dynamics, Agility, and others onstage, stressing that the biggest robot is actually the factory itself. NVIDIA's vision, from the ground up, is that future chip design, system design, and factory simulation will all be accelerated by NVIDIA's physical AI. Disney's robots once again made a dazzling appearance. Jensen joked to the adorable robots: "You'll be designed in the computer, manufactured in the computer, and even tested and verified in the computer before you ever face gravity in the real world."

If it weren't for Jensen Huang himself, the whole keynote could have passed for a model vendor's product launch. With debate about an AI bubble running high and Moore's Law slowing, Jensen seems eager to use real AI capabilities to shore up confidence in AI. Besides unveiling the powerful Vera Rubin AI supercomputing platform to feed the hunger for compute, he spent more effort than ever on applications and software, doing everything he could to show the tangible changes AI will bring. As Jensen himself put it, NVIDIA used to make chips for the virtual world; now it is stepping up to demonstrate, focusing on physical AI as represented by autonomous driving and humanoid robots, and entering the far more fiercely contested real, physical world. After all, the arms trade only keeps going once the fight starts.

*Finally, here's the surprise video: because of CES time limits, Jensen didn't get to present many of his slides, so he simply made a funny short video out of all the slides he didn't show. Enjoy ⬇️

If it weren’t for Jensen Huang, the whole keynote would feel like a model vendor’s product launch. With debates about an AI bubble running high these days—and Moore’s Law slowing—Jensen seems eager to use real AI capabilities to boost everyone’s confidence in AI. Besides unveiling the powerful new Vera Rubin AI supercomputing platform to satisfy the hunger for computing power, he spent more effort than ever on applications and software, doing everything possible to show us the tangible changes AI will bring. As Jensen himself said, in the past they made chips for the virtual world; now they’re stepping up and demonstrating, focusing on physical AI represented by autonomous driving and humanoid robots, and entering the more fiercely competitive real physical world. After all, only when the fight starts, can the arms trade keep going. *Finally, here’s the surprise video: Due to time limits at CES, Jensen didn’t get to present many of his PPT slides. So, he simply made a funny short video with all the slides he didn’t show. Enjoy⬇️

Unlike last year's solo keynote, Jensen Huang in 2026 packed his schedule. From NVIDIA Live to the Siemens Industrial AI Dialogue, then to Lenovo TechWorld, he spanned three events in just 48 hours. Last time, he announced the RTX 50 series graphics cards at CES. This time, it was physical AI, robotics, and a " company-grade nuke " that stole the spotlight. The Vera Rubin computing platform debuts: the more you buy, the more you save During the launch, the ever-playful Jensen brought a 2.5-ton AI server rack onto the stage, introducing the focus of this event: the Vera Rubin computing platform. Named after the astronomer who discovered dark matter, it has a clear goal: to accelerate AI training and bring the next generation of models sooner.

Unlike last year's solo keynote, Jensen Huang in 2026 packed his schedule. From NVIDIA Live to the Siemens Industrial AI Dialogue, then to Lenovo TechWorld, he spanned three events in just 48 hours. Last time, he announced the RTX 50 series graphics cards at CES. This time, it was physical AI, robotics, and a " company-grade nuke " that stole the spotlight. The Vera Rubin computing platform debuts: the more you buy, the more you save During the launch, the ever-playful Jensen brought a 2.5-ton AI server rack onto the stage, introducing the focus of this event: the Vera Rubin computing platform. Named after the astronomer who discovered dark matter, it has a clear goal: to accelerate AI training and bring the next generation of models sooner.  Typically, NVIDIA has an internal rule: each generation only upgrades 1-2 chips at most. But this time, Vera Rubin broke the norm by redesigning six chips at once, all of which have already entered mass production.

Typically, NVIDIA has an internal rule: each generation only upgrades 1-2 chips at most. But this time, Vera Rubin broke the norm by redesigning six chips at once, all of which have already entered mass production. The reason: as Moore's Law slows, traditional performance improvements can't keep up with AI models' tenfold annual growth, so NVIDIA opted for "ultimate co-design"—innovating at every layer of every chip and across the entire platform simultaneously.

The six chips are: 1. Vera CPU: - 88 NVIDIA custom Olympus cores - Uses NVIDIA spatial multithreading technology, supporting 176 threads - NVLink C2C bandwidth of 1.8 TB/s - System memory 1.5 TB (3x that of Grace) - LPDDR5X bandwidth 1.2 TB/s - 22.7 billion transistors

The six chips are: 1. Vera CPU: - 88 NVIDIA custom Olympus cores - Uses NVIDIA spatial multithreading technology, supporting 176 threads - NVLink C2C bandwidth of 1.8 TB/s - System memory 1.5 TB (3x that of Grace) - LPDDR5X bandwidth 1.2 TB/s - 22.7 billion transistors  2. Rubin GPU: - NVFP4 inference power of 50 PFLOPS, 5x that of previous-gen Blackwell - 336 billion transistors, 1.6x more than Blackwell - Equipped with third-generation Transformer engine, dynamically adjusting precision based on Transformer model needs

2. Rubin GPU: - NVFP4 inference power of 50 PFLOPS, 5x that of previous-gen Blackwell - 336 billion transistors, 1.6x more than Blackwell - Equipped with third-generation Transformer engine, dynamically adjusting precision based on Transformer model needs  3. ConnectX-9 NIC: - 800 Gb/s Ethernet based on 200G PAM4 SerDes - Programmable RDMA and data path accelerator - Certified by CNSA and FIPS - 23 billion transistors

3. ConnectX-9 NIC: - 800 Gb/s Ethernet based on 200G PAM4 SerDes - Programmable RDMA and data path accelerator - Certified by CNSA and FIPS - 23 billion transistors  4. BlueField-4 DPU: - End-to-end engine built for next-gen AI storage platforms - 800G Gb/s DPU for SmartNIC and storage processors - 64-core Grace CPU paired with ConnectX-9 - 126 billion transistors

4. BlueField-4 DPU: - End-to-end engine built for next-gen AI storage platforms - 800G Gb/s DPU for SmartNIC and storage processors - 64-core Grace CPU paired with ConnectX-9 - 126 billion transistors  5. NVLink-6 Switch Chip: - Connects 18 compute nodes, supporting up to 72 Rubin GPUs to operate as a unified whole - Under NVLink 6 architecture, each GPU enjoys 3.6 TB/s all-to-all communication bandwidth - Uses 400G SerDes, supports In-Network SHARP Collectives, enabling collective communication directly within the switch network

5. NVLink-6 Switch Chip: - Connects 18 compute nodes, supporting up to 72 Rubin GPUs to operate as a unified whole - Under NVLink 6 architecture, each GPU enjoys 3.6 TB/s all-to-all communication bandwidth - Uses 400G SerDes, supports In-Network SHARP Collectives, enabling collective communication directly within the switch network  6. Spectrum-6 Optical Ethernet Switch Chip - 512 channels, 200Gbps per channel, enabling faster data transfer - Integrated silicon photonics based on TSMC COOP process - Equipped with copackaged optics - 352 billion transistors

6. Spectrum-6 Optical Ethernet Switch Chip - 512 channels, 200Gbps per channel, enabling faster data transfer - Integrated silicon photonics based on TSMC COOP process - Equipped with copackaged optics - 352 billion transistors  With deep integration of these six chips, the Vera Rubin NVL72 system delivers comprehensive improvements over the previous-gen Blackwell. In NVFP4 inference tasks, this chip achieves an impressive 3.6 EFLOPS, five times the previous Blackwell architecture. For NVFP4 training, performance reaches 2.5 EFLOPS, a 3.5x increase. In storage capacity, NVL72 is equipped with 54TB of LPDDR5X memory, 3x that of the previous generation. HBM (high-bandwidth memory) capacity reaches 20.7TB, a 1.5x increase. In bandwidth, HBM4 bandwidth reaches 1.6 PB/s, 2.8x up; scale-up bandwidth hits 260 TB/s, a 2x growth. Despite such massive performance improvements, transistor count increased only 1.7x to 220 trillion, demonstrating innovation in semiconductor manufacturing.

With deep integration of these six chips, the Vera Rubin NVL72 system delivers comprehensive improvements over the previous-gen Blackwell. In NVFP4 inference tasks, this chip achieves an impressive 3.6 EFLOPS, five times the previous Blackwell architecture. For NVFP4 training, performance reaches 2.5 EFLOPS, a 3.5x increase. In storage capacity, NVL72 is equipped with 54TB of LPDDR5X memory, 3x that of the previous generation. HBM (high-bandwidth memory) capacity reaches 20.7TB, a 1.5x increase. In bandwidth, HBM4 bandwidth reaches 1.6 PB/s, 2.8x up; scale-up bandwidth hits 260 TB/s, a 2x growth. Despite such massive performance improvements, transistor count increased only 1.7x to 220 trillion, demonstrating innovation in semiconductor manufacturing.  In engineering design, Vera Rubin also brings technological breakthroughs. Previously, a supercomputer node needed 43 cables, two hours for assembly, and mistakes were common. Now, Vera Rubin nodes use zero cables, only six liquid cooling tubes, done in five minutes. Even more impressive, the rack's rear is filled with almost 3.2 kilometers of copper cable—5,000 cables forming the NVLink backbone network, achieving 400Gbps speeds. In Jensen's words: "It probably weighs several hundred pounds. You have to be a pretty fit CEO to handle this job." In the AI world, time is money. A key stat: training a 10 trillion-parameter model, Rubin only needs a quarter the number of Blackwell systems; generating one token costs about one-tenth that of Blackwell.

In engineering design, Vera Rubin also brings technological breakthroughs. Previously, a supercomputer node needed 43 cables, two hours for assembly, and mistakes were common. Now, Vera Rubin nodes use zero cables, only six liquid cooling tubes, done in five minutes. Even more impressive, the rack's rear is filled with almost 3.2 kilometers of copper cable—5,000 cables forming the NVLink backbone network, achieving 400Gbps speeds. In Jensen's words: "It probably weighs several hundred pounds. You have to be a pretty fit CEO to handle this job." In the AI world, time is money. A key stat: training a 10 trillion-parameter model, Rubin only needs a quarter the number of Blackwell systems; generating one token costs about one-tenth that of Blackwell.  Moreover, while Rubin's power consumption is twice that of Grace Blackwell, its performance gains far outpace power growth: 5x boost in inference, 3.5x in training performance. More importantly, Rubin's throughput (AI tokens per watt per dollar) is 10x higher than Blackwell. For a $5 billion, gigawatt-scale data center, this means revenue potential will directly double. The biggest pain point in the AI industry used to be insufficient context memory. Specifically, AI generates "KV Cache" (key-value cache) as its working memory. As conversations grow and models scale up, HBM memory becomes a bottleneck.

The biggest pain point in the AI industry has long been insufficient context memory. Specifically, AI generates a "KV Cache" (key-value cache) as its working memory. As conversations grow longer and models scale up, HBM capacity becomes a bottleneck.

Last year, NVIDIA introduced the Grace Blackwell architecture to expand memory, but it still wasn't enough. Vera Rubin's solution is to deploy BlueField-4 processors in the rack to manage the KV Cache. Each node is equipped with four BlueField-4s, each backed by 150TB of context memory that is distributed to the GPUs, so each GPU gets an extra 16TB, compared with only about 1TB on board the GPU itself. Crucially, bandwidth stays at 200Gbps, with no loss of speed.

But capacity alone isn't enough. To make these "sticky notes," spread across dozens of racks and thousands of GPUs, work as if they were a single memory, the network must be "big enough, fast enough, and stable enough." That's where Spectrum-X comes in. NVIDIA bills Spectrum-X as the world's first end-to-end Ethernet networking platform purpose-built for generative AI. The latest generation uses TSMC's COUPE process with integrated silicon photonics, offering 512 channels × 200Gbps. Jensen did the math: for a $5 billion, gigawatt-scale data center, Spectrum-X delivers a 25% throughput boost, equivalent to saving $500 million. "You could say this network system is almost 'free.'"

On security, Vera Rubin also supports Confidential Computing. All data is encrypted in transit, at rest, and during computation, including across PCIe channels, NVLink, CPU-GPU communication, and every other bus. Companies can safely deploy their models on external systems without worrying about data leaks.
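To see why the KV Cache outgrows HBM, it helps to estimate its size. In a standard transformer, every token in the context stores one key vector and one value vector per layer and per KV head, so the cache grows linearly with context length and with the number of concurrent conversations. The sketch below uses a hypothetical model configuration (80 layers, 8 KV heads, head dimension 128, 16-bit values) chosen only for illustration; real models differ, and some compress their cache, so treat the results as order-of-magnitude estimates.

```python
from dataclasses import dataclass

GIB = 1024 ** 3


@dataclass(frozen=True)
class ModelConfig:
    """Hypothetical decoder-only transformer, for illustration only."""
    n_layers: int
    n_kv_heads: int      # with grouped-query attention this can be smaller than the query-head count
    head_dim: int
    bytes_per_elem: int  # 2 for FP16/BF16


def kv_bytes_per_token(cfg: ModelConfig) -> int:
    # Factor of 2: one key vector and one value vector per layer and per KV head.
    return 2 * cfg.n_layers * cfg.n_kv_heads * cfg.head_dim * cfg.bytes_per_elem


def kv_cache_bytes(cfg: ModelConfig, context_tokens: int, sessions: int = 1) -> int:
    """Total KV cache for `sessions` concurrent conversations of `context_tokens` each."""
    return kv_bytes_per_token(cfg) * context_tokens * sessions


if __name__ == "__main__":
    cfg = ModelConfig(n_layers=80, n_kv_heads=8, head_dim=128, bytes_per_elem=2)
    context = 128 * 1024  # a 128K-token conversation
    for sessions in (1, 8, 32):
        gib = kv_cache_bytes(cfg, context, sessions) / GIB
        print(f"{sessions:2d} session(s) x 128K tokens -> {gib:,.0f} GiB of KV cache")
```

With these assumed settings, one 128K-token conversation needs 40 GiB of cache and 32 such conversations need 1,280 GiB, which shows how quickly on-GPU memory runs out as context and user counts grow.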

DeepSeek shocked the world: open source and agents are mainstream in AI

With the main event covered, let's go back to the start of the keynote. Jensen opened with a striking number: roughly $10 trillion worth of computing built over the past decade is being completely modernized. But this is not just a hardware upgrade; it is a paradigm shift in software. He especially highlighted agentic models with autonomous behavior and singled out Cursor, which has completely changed how NVIDIA writes code.

The most exciting moment was his high praise for the open source community. Jensen said last year's breakthrough, DeepSeek R1, surprised the world: as the first open source reasoning system, it set off a wave of industry development. On his slide, familiar Chinese players Kimi K2 and DeepSeek V3.2 appeared as the top two open source projects. Jensen believes open source models may currently lag the very top models by about six months, but a new model emerges every six months. That pace of iteration means nobody, whether startups, tech giants, or researchers (NVIDIA included), wants to miss out. So this time NVIDIA isn't just selling shovels and pitching graphics cards: it has built the multi-billion-dollar DGX Cloud supercomputer and developed cutting-edge models such as La Proteina (for protein design) and OpenFold 3.

NVIDIA's open source model ecosystem covers biomedicine, physical AI, agent models, robotics, and autonomous driving.

The NVIDIA Nemotron family of open source models was another highlight of the keynote, covering speech, multimodal, retrieval-augmented generation, safety, and other areas. Jensen mentioned that Nemotron models perform well on multiple leaderboards and are being widely adopted by enterprises.

What is physical AI? Dozens of models launched at once

If large language models solve the "digital world," NVIDIA's next ambition is clearly to conquer the "physical world." Jensen noted that data for teaching AI to understand physical laws and operate in the real world is extremely scarce. Beyond the open source agentic Nemotron models, he laid out the core "three computers" architecture for building physical AI:


The training computer: the familiar machines built from training-grade GPUs, such as the GB300 architecture shown in the image.

The inference computer: the "cerebellum" running at the edge, inside the robot or vehicle, responsible for real-time execution.

The simulation computer: Omniverse and Cosmos, which give AI a virtual training environment in which to learn physical feedback through simulation.

The Cosmos system can generate massive amounts of physical-world AI training environments.

Building on this architecture, Jensen officially launched the show-stopping Alpamayo, billed as the world's first autonomous driving model with reasoning capabilities.

Unlike traditional autonomous driving stacks, Alpamayo is trained end to end. Its breakthrough is in addressing the "long tail problem" of autonomous driving: when facing complex road conditions it has never seen before, Alpamayo doesn't rigidly execute code but reasons like a human driver. "It will tell you what it's going to do next, and why it made that decision." In the demo, the vehicle drove with astonishing naturalness, breaking extremely complex scenarios down into basic common sense. And this is more than a demo: Jensen announced that the Mercedes CLA, equipped with the Alpamayo tech stack, will launch in the US in Q1 this year, followed by Europe and Asia.

The car was rated the world's safest by NCAP, thanks to NVIDIA's unique "dual safety stack" design: if the end-to-end AI model is uncertain about road conditions, the system instantly switches to a traditional, more reliable safety mode to keep the vehicle safe.
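NVIDIA has not published the internals of its "dual safety stack," so the following is only a hypothetical illustration of the general pattern the article describes: a confidence-gated arbiter that uses the end-to-end model's plan when the model is sure of itself and otherwise hands control to a conventional planner. The `Plan` fields, the `arbitrate` function, and the 0.8 threshold are invented for this sketch and are not NVIDIA or Mercedes APIs.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class Source(Enum):
    END_TO_END = "end-to-end model"
    CLASSICAL = "classical safety stack"


@dataclass(frozen=True)
class Plan:
    """Hypothetical driving command, for illustration only."""
    steering_rad: float
    target_speed_mps: float


def arbitrate(ai_plan: Plan, ai_confidence: float, fallback_plan: Plan,
              threshold: float = 0.8) -> Tuple[Source, Plan]:
    """Pick which stack drives this control cycle.

    The end-to-end plan is used only when its confidence is a valid value in
    [0, 1] that meets the threshold; anything else (low, out of range, or NaN)
    falls back to the conventional plan.
    """
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be between 0 and 1")
    if 0.0 <= ai_confidence <= 1.0 and ai_confidence >= threshold:
        return Source.END_TO_END, ai_plan
    return Source.CLASSICAL, fallback_plan


if __name__ == "__main__":
    ai = Plan(steering_rad=0.05, target_speed_mps=12.0)
    safe = Plan(steering_rad=0.0, target_speed_mps=8.0)
    for conf in (0.95, 0.40):
        source, plan = arbitrate(ai, conf, safe)
        print(f"confidence {conf:.2f}: {source.value} -> {plan}")
```

Treating any invalid confidence value as "uncertain" keeps the sketch on the conservative side; a production system would likely also add hysteresis so control does not flap between the two stacks on every cycle.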

At the launch, Jensen also showcased NVIDIA's robotics strategy. A table compared nine top AI and related hardware manufacturers, all expanding their product lines and vying for the robotics sector; the highlighted cells show products added since last year. All of these robots will be equipped with Jetson mini computers and trained in the Isaac simulator on the Omniverse platform. NVIDIA is also integrating this technology into industrial software from companies such as Synopsys, Cadence, and Siemens.

Jensen invited humanoid and quadruped robots from Boston Dynamics, Agility, and others onstage, emphasizing that the biggest robot is actually the factory itself. NVIDIA's vision is that, from the ground up, future chip design, system design, and factory simulation will all be accelerated by its physical AI. Once again, Disney robots made a dazzling appearance at the launch. Jensen joked to these adorable robots: "You'll be designed in the computer, manufactured in the computer, and even tested and verified in the computer before you ever face gravity in the real world."

Had it not been Jensen Huang on stage, you might have mistaken the whole keynote for an AI model company's product launch. With debate over an AI bubble running high and Moore's Law slowing, Jensen seems eager to use real AI capabilities to boost everyone's confidence in AI. Besides unveiling the powerful Vera Rubin AI supercomputing platform to satisfy the hunger for computing power, he spent more effort than ever on applications and software, doing everything possible to show the tangible changes AI will bring. As Jensen himself put it, NVIDIA used to make chips for the virtual world; now it is stepping onto the field itself, focusing on physical AI, represented by autonomous driving and humanoid robots, and entering the more fiercely contested real world. After all, the arms trade only keeps going once the fighting starts.

*Finally, here's the surprise video: because of time limits at CES, Jensen didn't get to present many of his slides, so he simply made a funny short video out of all the slides he didn't show. Enjoy⬇️

