From invisibility cloaks to AI chips: Neurophos secures $110 million to develop compact optical processors for AI inference

Revolutionizing AI Hardware: Neurophos and the Future of Photonic Computing

Two decades ago, Duke University’s David R. Smith pioneered the use of metamaterials, engineered composites with properties not found in natural materials, to create an early version of an invisibility cloak. Although this device could hide objects at only a single microwave wavelength, the underlying breakthroughs in materials science laid the groundwork for new advances in electromagnetism.

Building on this legacy, Neurophos, a photonics company based in Austin and spun out of Duke University and Smith’s Metacept incubator, is pushing the boundaries of this research. Their mission: to address one of the most pressing challenges in artificial intelligence—scaling computational power without dramatically increasing energy consumption.

Introducing the Metasurface Modulator

Neurophos has developed a groundbreaking “metasurface modulator” with optical characteristics that allow it to function as a tensor core processor, performing matrix-vector multiplications central to AI workloads, especially inference. Unlike conventional GPUs and TPUs that rely on silicon-based transistors, Neurophos’s approach enables thousands of these modulators to be integrated onto a single chip. The company asserts that its optical processing unit (OPU) delivers much faster inference performance and greater efficiency than the silicon GPUs currently powering AI data centers.
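For readers unfamiliar with the operation, the following is a minimal sketch of a matrix-vector multiplication in plain Python. It only illustrates the arithmetic that an accelerator performs; it says nothing about how Neurophos's hardware implements it. The function name `matvec` and the sample values are hypothetical and chosen purely for illustration.

```python
def matvec(weights, x):
    """Return y = W x: each output is the dot product of one row of W with x."""
    if any(len(row) != len(x) for row in weights):
        raise ValueError("every row of W must have the same length as x")
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


# Illustrative values only: a 2x3 weight matrix applied to a 3-element input.
W = [[1.0, 2.0, 0.0],
     [0.0, -1.0, 3.0]]
x = [4.0, 5.0, 6.0]

y = matvec(W, x)
print(y)  # [14.0, 13.0]
```

In a neural network, `W` would hold a layer's weights and `x` its input activations. Inference repeats this multiply-and-accumulate pattern at very large scale, which is why hardware that parallelizes it is attractive.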

Major Investment Fuels Innovation

To accelerate chip development, Neurophos has secured $110 million in Series A funding, led by Gates Frontier (Bill Gates’ investment arm), with additional backing from Microsoft’s M12, Carbon Direct, Aramco Ventures, Bosch Ventures, Tectonic Ventures, Space Capital, and others.

The Promise and Challenge of Photonic Chips

Photonic computing is not a new concept, and its theoretical advantages over traditional silicon electronics are significant: light generates less heat, travels faster, and is less affected by temperature and electromagnetic interference. However, photonic components are typically larger and harder to mass-produce than their electronic counterparts, and they require bulky, power-hungry converters to switch between digital and analog signals.

Neurophos claims its metasurface technology overcomes these hurdles by being approximately 10,000 times smaller than standard optical transistors. This miniaturization allows for thousands of units per chip, dramatically increasing parallel processing and efficiency compared to conventional silicon solutions.

“By shrinking the optical transistor, we can perform far more calculations in the optical domain before converting back to electronics,” explained Dr. Patrick Bowen, CEO and co-founder of Neurophos. “To achieve high speeds, energy efficiency must come first—otherwise, faster chips simply consume more power. Solving efficiency is the key to unlocking speed.”

Performance That Outpaces Industry Leaders

According to Neurophos, its OPU can dramatically outperform Nvidia’s B200 AI GPU. The chip reportedly operates at 56 GHz and achieves a peak of 235 peta-operations per second (POPS) while drawing 675 watts, compared with the B200’s 9 POPS at 1,000 watts. The company says it has already secured several customers, and Microsoft is among those closely evaluating the technology.
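To put the quoted figures on a common footing, the short sketch below converts them to operations per watt and takes the ratios. It uses only the peak numbers reported above, which are company claims rather than independent measurements, and it assumes the two POPS figures are directly comparable (for example, that they refer to the same numeric precision), which the article does not state. The helper name `tops_per_watt` is hypothetical.

```python
# Peak figures as quoted in the article (company-reported, not independently verified).
OPU_POPS, OPU_WATTS = 235.0, 675.0
B200_POPS, B200_WATTS = 9.0, 1000.0


def tops_per_watt(pops: float, watts: float) -> float:
    """Convert peak POPS and power draw to TOPS per watt (1 POPS = 1,000 TOPS)."""
    return pops * 1000.0 / watts


opu_eff = tops_per_watt(OPU_POPS, OPU_WATTS)
b200_eff = tops_per_watt(B200_POPS, B200_WATTS)

throughput_ratio = OPU_POPS / B200_POPS  # raw peak throughput, OPU vs. B200
efficiency_ratio = opu_eff / b200_eff    # throughput per watt, OPU vs. B200

print(f"OPU:  {opu_eff:.1f} TOPS/W")
print(f"B200: {b200_eff:.1f} TOPS/W")
print(f"Peak throughput ratio: {throughput_ratio:.1f}x")
print(f"Efficiency ratio:      {efficiency_ratio:.1f}x")
```

On these numbers the OPU's claimed peak throughput is roughly 26 times the B200's, and its claimed throughput per watt is roughly 39 times higher.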

Competing in a Crowded Market

Despite these advances, Neurophos faces stiff competition in a sector dominated by Nvidia, the world’s most valuable publicly traded company and a cornerstone of the AI industry. Other photonics startups, such as Lightmatter, have shifted focus to interconnects. Neurophos anticipates its first chips will be commercially available by mid-2028.

Bowen remains optimistic that the efficiency and speed of their metasurface modulators will set Neurophos apart from competitors.

“Most companies, including Nvidia, are making incremental improvements based on silicon physics, which are tied to TSMC’s manufacturing progress. Typically, TSMC nodes improve energy efficiency by about 15% every few years. Even projecting Nvidia’s architectural gains, by the time we launch in 2028, we expect to maintain a 50-fold advantage in both speed and efficiency over Blackwell,” Bowen stated.

Manufacturing and Future Plans

To address the traditional manufacturing challenges of optical chips, Neurophos designs its products to be compatible with standard silicon foundry processes and materials.

The new funding will support the creation of Neurophos’s first integrated photonic computing system, including data center-ready OPU modules, a comprehensive software stack, and early-access developer hardware. The company is also expanding its headquarters in Austin and opening a new engineering office in San Francisco.

“Modern AI inference requires enormous computational power and energy,” said Dr. Marc Tremblay, corporate vice president and technical fellow for core AI infrastructure at Microsoft. “We need a leap in computing capability to match the advances in AI models, and that’s exactly what Neurophos and its talented team are working to deliver.”


You may also like

Will HBAR price rebound after Hedera’s partnership with McLaren Racing?

Ireland introduces legislation permitting law enforcement to deploy spyware

Musk’s SpaceX Selects Banks to Head IPO, According to Financial Times

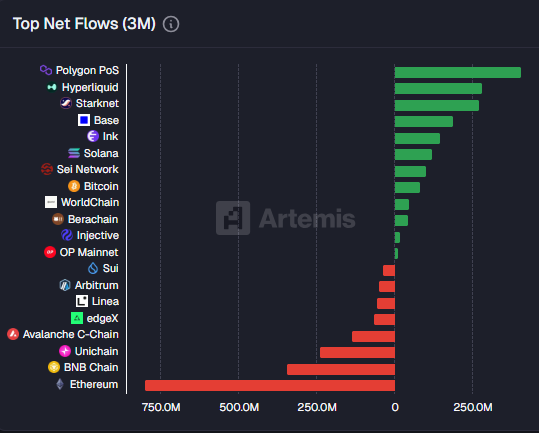

Polygon leads all chains in total inflows after Polymarket expansion