How decentralized AI training will create a new asset class for digital intelligence

Frontier AI, the most advanced general-purpose AI systems currently in development, is becoming one of the world’s most strategically and economically important industries, yet it remains largely inaccessible to most investors and builders. Training a competitive model today, comparable to the ones consumers use every day, can cost hundreds of millions of dollars, demand tens of thousands of high-end GPUs, and require a level of operational sophistication that only a handful of companies can support. As a result, most investors, especially retail ones, have no direct way to own a piece of the artificial intelligence sector.

That constraint is about to change. A new generation of decentralized AI networks is moving from theory to production. These networks connect GPUs of all kinds from around the world, from expensive high-end hardware to consumer gaming rigs and even your MacBook’s M4 chip, into a single training fabric capable of supporting large, frontier-scale training runs. What matters for markets is that this infrastructure does more than coordinate compute. It also coordinates ownership: participants who contribute resources are issued tokens, which give them a direct stake in the AI models they help create.

Decentralized training is a genuine advance in the state of the art. Until recently, AI experts said that training large models across untrusted, heterogeneous hardware on the open internet was impossible. However, Prime Intellect has now trained decentralized models that are currently in production: one with 10 billion parameters (the quick, efficient all-rounder that’s fast and capable for everyday tasks) and another with 32 billion parameters (the deep thinker that excels at complex reasoning and delivers more nuanced, sophisticated results).

Gensyn, a decentralized machine-learning protocol, has demonstrated reinforcement learning that can be verified onchain. Pluralis has shown that training large models on a swarm of commodity GPUs (the standard graphics cards found in gaming computers and consumer devices, rather than expensive specialized chips) is an increasingly viable decentralized approach to large-scale pretraining. Pretraining is the foundational phase in which AI models learn from massive datasets before being fine-tuned for specific tasks.

To be clear, this work is not just a research project; it is already happening. In decentralized training networks, the model does not “sit” inside a single company’s data center. Instead, it lives across the network itself. Model parameters are fragmented and distributed, meaning no single participant holds the entire asset. Contributors supply GPU compute and bandwidth, and in return they receive tokens that reflect their stake in the resulting model. Training participants are therefore more than rented resources: they earn ownership in the AI they are creating, which aligns their incentives with the model’s success. That is a very different arrangement from what we see in centralized AI labs.
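As a rough illustration of the idea that tokens "reflect their stake," the sketch below splits a fixed token supply among contributors in proportion to the compute each one supplied. It is a simplified assumption, not the scheme used by any network named here: the function name `allocate_tokens`, the contributor labels, and the "compute units" measure are all hypothetical, and real networks would also need to verify contributions and may weight bandwidth, uptime, or other factors.

```python
from fractions import Fraction


def allocate_tokens(contributions, total_tokens):
    """Split total_tokens pro rata by contributed compute units.

    contributions: dict mapping a (hypothetical) contributor name to the
    compute units they supplied. Fractions keep the arithmetic exact.
    """
    total = sum(contributions.values())
    if total <= 0:
        raise ValueError("no contributions recorded")
    return {
        name: Fraction(amount) / total * total_tokens
        for name, amount in contributions.items()
    }


# Hypothetical example: three very different machines share one training run.
contributions = {"datacenter_h100": 600, "gaming_rig": 300, "laptop": 100}
allocation = allocate_tokens(contributions, total_tokens=1_000_000)

for name, tokens in allocation.items():
    print(f"{name}: {int(tokens)} tokens")
```

Under this assumption, a participant who supplied 60% of the compute would hold 60% of the tokens tied to the model, regardless of what kind of hardware produced that compute.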

Disclaimer: The content of this article solely reflects the author's opinion and does not represent the platform in any capacity. This article is not intended to serve as a reference for making investment decisions.

You may also like

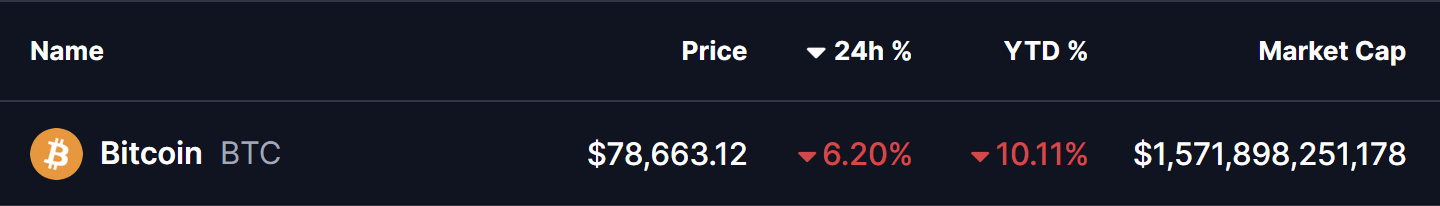

Bitcoin retreats below $77,000, Tether posts $10B annual profit, DOJ seizes $400M in Helix assets | Weekly recap

PEPE Slides as Whale Dumps $3M — Is More Downside Ahead?

Bitcoin Spot ETF Slumps in January- Key Bullish Fractal To Watch Out in February for $BTC

Bitcoin’s Rollercoaster: Fall and Possible Rebound